I’ve installed 4 NVMe SSDs into my ClusterBox. None of the Blade 3s recognize them. lsblk only shows the eMMC. I see CONFIG_BLK_DEV_NVME=y in /proc/config.gz so they should be recognized? The SSDs in question are these. Is there some reason they wouldn’t be compatible?
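A note on the `CONFIG_BLK_DEV_NVME=y` check: it shows that the NVMe driver is built into the kernel, but the SSD still has to be enumerated on the PCIe bus before it appears in lsblk. The following is a minimal Python sketch for reading an option out of the running kernel's config. The helper names `kernel_option` and `read_running_config` are made up for illustration, and `/proc/config.gz` is only present when the kernel was built with `CONFIG_IKCONFIG_PROC`.

```python
import gzip
from typing import Optional


def kernel_option(config_text: str, name: str) -> Optional[str]:
    """Return the value of a kernel config option ("y", "m", ...) or None if unset."""
    for line in config_text.splitlines():
        line = line.strip()
        # Unset options appear as comments ("# CONFIG_X is not set") and are skipped.
        if line.startswith(name + "="):
            return line.split("=", 1)[1]
    return None


def read_running_config(path: str = "/proc/config.gz") -> str:
    """Read the gzip-compressed config of the running kernel as text."""
    with gzip.open(path, "rt") as fh:
        return fh.read()
```

On a board, `kernel_option(read_running_config(), "CONFIG_BLK_DEV_NVME")` would be expected to return `"y"` for a built-in driver, `"m"` for a module, or `None` if the option is not set.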

My Blade 3 Case arrived today. An NVMe SSD does not work in it either. The same NVMe SSD is recognized and available if I put it in an external USB-C case.

Further details:

An ASMedia 1166 in the M.2 slot of the Blade 3 Case expansion card also does not work.

A Marvell 88SE9215 in the mini-PCIe slot does work.

Hi JGiszczak, connecting an NVMe SSD in the Cluster Box currently relies on automatic PCIe Mux switching to redirect two PCIe 3.0 lanes from Blade 3. However, the system on Blade 3 might not load the correct driver automatically. We’re actively working on this functionality to ensure seamless driver loading.

As a temporary solution, you can flash this firmware to your Blade 3 board. This will enable it to recognize the NVMe SSD when installed in the Cluster Box. Please note that this is a temporary solution, and automatic driver loading will be available in a future firmware update.

We understand you’re having trouble using an NVMe SSD or ASMedia 1166 with your Blade 3 Case. To help diagnose the issue, could you please confirm whether you’re using the latest firmware available on the Mixtile Docs website? Our tests have shown that NVMe SSDs and the ASMedia 1166 are recognized correctly with the latest firmware. For the mini-PCIe slot, we currently support only some Intel Wi-Fi modules by default; if you want to use the Marvell 88SE9215, you may need to set up the correct driver. You can refer to this page for the supported mini-PCIe modules.

The Rockchip Wiki says the only way to enter Bootrom/Maskrom mode when the chip is booting from eMMC is to short eMMC CLK to GND. Is this still true? I’m not at all comfortable doing that, so loading that image doesn’t seem feasible.

I have no difficulty writing a new kernel image to the appropriate partition however. Can the kernel image be provided independently of the OS image?

Hi JGiszczak, the new kernel image can be downloaded from here: clusterbox-blade3-debian-kernel-240126.img

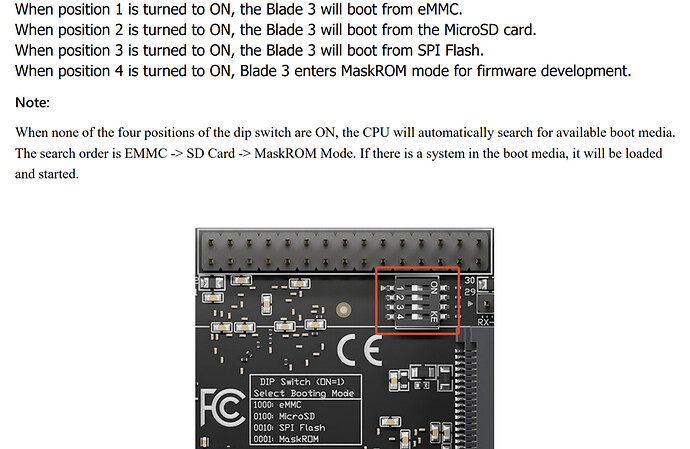

it’s not necessary to short those two pins to enter MaskROM mode. There is a DIP switch on Blade 3 and you could use it to enter MaskROM mode easily. You can refer to the user manual for more information or check the screenshot below:

I had forgotten the DIP switches. Thank you for the reminder.

With this version of the kernel, an NVMe SSD is visible on Node 4 of the Cluster Box (lsblk shows nvme0n1 on Node 4). The same model of NVMe SSD is not visible on Nodes 2 or 3. I have not tried this kernel version on Node 1 yet, as my Node 1 runs a custom kernel.

In addition, my standalone Blade 3 in a Blade 3 Case also does not recognize the same model NVMe SSD.

Hi JGiszczak, I’d like to assist you with the NVMe SSD visibility issue on Blade 3. To troubleshoot, could you try moving Blade 3 from Node 4 to either Node 2 or 3 and see if the SSD becomes detectable? The current description doesn’t provide enough information to pinpoint the exact cause.

If you’re open to it, adding me on Skype (live:aitfreedom) would allow for more efficient collaboration and faster resolution.

For a standalone Blade 3 setup with NVMe SSD, you can directly download the standard Debian or Ubuntu image from the Mixtile website: blade-3-download-images.

I’ve swapped Nodes 3 and 4. Now Node 3 can see nvme0n1 and Node 4 cannot, despite both running identical versions of the kernel. The OS installations aren’t perfectly identical, but the differences are trivial, as seen here:

mixtile@blade31:~$ ./compare_ubuntu_apt.pl blade33-package.lst blade34-package.lst

----------------------------------

MISSING IN blade34-package.lst

----------------------------------

+ gir1.2-gtksource-3.0 : 3.24.11-2

+ libcanberra-gtk3-module : 0.30-7

+ libgtksourceview-3.0-1 : 3.24.11-2

+ libgtksourceview-3.0-common : 3.24.11-2

+ meld : 3.20.2-2

+ patch : 2.7.6-7

----------------------------------

MISSING IN blade33-package.lst

----------------------------------

- inetutils-tools : 2:2.0-1+deb11u2
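For readers who want to run a similar comparison, here is a rough Python sketch of the idea. It is not the `compare_ubuntu_apt.pl` script used above. It assumes each package list has one `name : version` entry per line, which is a guess based on the output format, and the function names are illustrative.

```python
from typing import Dict, List, Tuple


def parse_package_list(text: str) -> Dict[str, str]:
    """Parse lines of the assumed form "name : version" into a name -> version dict."""
    packages: Dict[str, str] = {}
    for line in text.splitlines():
        if " : " not in line:
            continue  # skip blank lines and anything that is not a package entry
        name, version = line.split(" : ", 1)
        packages[name.strip()] = version.strip()
    return packages


def missing_in(reference: Dict[str, str], other: Dict[str, str]) -> List[Tuple[str, str]]:
    """Return (name, version) pairs present in `reference` but absent from `other`."""
    return sorted((name, ver) for name, ver in reference.items() if name not in other)
```

Calling `missing_in(node3, node4)` and then `missing_in(node4, node3)` on the two parsed lists gives the two halves of the report.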

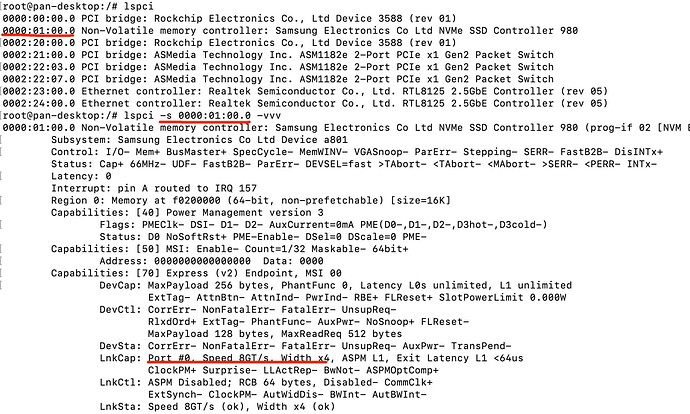

Hi JGiszczak, it seems there may be an issue with the PCIe connection of the Blade 3 in Node 3. Could you check the PCIe connection of that Blade 3 with the steps below?

Step 1 - Flash the Ubuntu firmware to Blade 3.

Step 2 - Connect the U.2 to M.2 adapter board, with the NVMe SSD installed, to this standalone Blade 3.

Step 3 - Power on and use the command “lspci” to get the ID of the SSD. Then use the command “lspci -s [ID of SSD] -vvv” to check whether all 4 PCIe lanes are working normally.

To start, here are the results from a blade in slot 3 in the cluster box:

0001:11:00.0 Non-Volatile memory controller: Device 1ed0:2283 (prog-if 02 [NVM Express])

Subsystem: Device 1ed0:2283

Control: I/O- Mem+ BusMaster+ SpecCycle- MemWINV- VGASnoop- ParErr- Stepping- SERR- FastB2B- DisINTx+

Status: Cap+ 66MHz- UDF- FastB2B- ParErr- DEVSEL=fast >TAbort- <TAbort- <MAbort- >SERR- <PERR- INTx-

Latency: 0

Interrupt: pin A routed to IRQ 156

Region 0: Memory at f1210000 (64-bit, non-prefetchable) [size=16K]

Expansion ROM at f1200000 [virtual] [disabled] [size=64K]

Capabilities: [80] Express (v2) Endpoint, MSI 00

DevCap: MaxPayload 256 bytes, PhantFunc 0, Latency L0s unlimited, L1 unlimited

ExtTag+ AttnBtn- AttnInd- PwrInd- RBE+ FLReset+ SlotPowerLimit 0.000W

DevCtl: CorrErr- NonFatalErr- FatalErr- UnsupReq-

RlxdOrd+ ExtTag+ PhantFunc- AuxPwr- NoSnoop+ FLReset-

MaxPayload 128 bytes, MaxReadReq 512 bytes

DevSta: CorrErr- NonFatalErr- FatalErr- UnsupReq- AuxPwr- TransPend-

LnkCap: Port #1, Speed 8GT/s, Width x4, ASPM L0s L1, Exit Latency L0s unlimited, L1 unlimited

ClockPM- Surprise- LLActRep- BwNot- ASPMOptComp+

LnkCtl: ASPM L1 Enabled; RCB 64 bytes, Disabled- CommClk+

ExtSynch- ClockPM- AutWidDis- BWInt- AutBWInt-

LnkSta: Speed 8GT/s (ok), Width x2 (downgraded)

TrErr- Train- SlotClk+ DLActive- BWMgmt- ABWMgmt-

I will try removing that blade and using the U.2 to M.2 adapter board later today.

The blade that could see an SSD in the Cluster Box cannot see an SSD when removed from the Cluster Box and attached to the M.2 adapter board. After putting it back into the Cluster Box, it can see an SSD again.

Hi JGiszczak, could you try reinstalling the SSD in the M.2 adapter board? There may be a connection issue between the SSD and the M.2 connector. One more thing: when you attached the M.2 adapter board to Blade 3, did you use the Ubuntu system on Blade 3? The customized Debian kernel cannot see an SSD when an M.2 adapter board is used.

I have removed and reinstalled the SSD on the M.2 adapter board. It made no difference. So far as I know there’s no way to poorly install an SSD if the retaining screw can be fastened, and I could.

I’m using the default Debian distribution that was factory installed and the kernel image linked above, 240126.img. I have installed my custom compiled kernel only on Node 1 so far. Neither my custom kernel nor the 240126.img can reliably route the PCIe lanes on any node except node 4, and then only when it is installed into the Cluster Box and only 2 lanes. When the same node is attached to the M.2 adapter outside of the Cluster Box, it is unable to route lanes properly.

I did buy and install 5 NVMe SSDs. Four in the Cluster Box and one on the M.2 adapter board. I think it is unlikely that I installed only one of them correctly.

There may have been some confusion in my previous replies. The 240126 kernel image cannot work with the M.2 adapter board; for that you have to use the Ubuntu image from the Mixtile website: blade-3-download-images.

When Blade 3 is used in the Cluster Box with an NVMe SSD installed, two PCIe lanes work as RC (Root Complex) and connect to the SSD, and the remaining two lanes work as EP (Endpoint) and connect to the PCIe switch. If no NVMe SSD is installed, all four PCIe lanes work as EP in the Cluster Box. When Blade 3 is used standalone with an M.2 adapter board, all four PCIe lanes work as RC.

So the PCIe settings differ between these two situations. The current image cannot yet load the correct settings automatically for each situation; this feature is still under development. Please use the Ubuntu image when you want to use the M.2 adapter board, and the Debian image with the 240126 kernel when using the Cluster Box.
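The lane assignment described in this reply can be summarised as a small lookup. This is only an illustrative Python sketch of the three cases as stated. The function name `pcie_lane_roles` and its return format are invented for the example and are not part of any Mixtile software.

```python
from typing import Dict


def pcie_lane_roles(in_cluster_box: bool, nvme_installed: bool) -> Dict[str, int]:
    """Number of Blade 3's four PCIe lanes acting as Root Complex (RC) and Endpoint (EP)."""
    if not in_cluster_box:
        # Standalone with the M.2 adapter board: all four lanes work as RC.
        return {"RC": 4, "EP": 0}
    if nvme_installed:
        # Cluster Box with an SSD: two lanes RC (to the SSD), two lanes EP (to the switch).
        return {"RC": 2, "EP": 2}
    # Cluster Box without an SSD: all four lanes work as EP.
    return {"RC": 0, "EP": 4}
```

For example, `pcie_lane_roles(in_cluster_box=True, nvme_installed=True)` gives `{"RC": 2, "EP": 2}`, which corresponds to an SSD link of two lanes in the Cluster Box.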

I wrote the Ubuntu image to a microSD card, inserted it into my Blade 3 with the M.2 adapter attached and changed DIP switch 2 to On. It did not boot. It did nothing. The blue power LEDs came on but nothing ever appeared on the monitor or the network. I also attempted to boot the microSD card with no mini-PCIe card installed and without the M.2 adapter connected and the behavior was the same. No response.

I changed the DIP switch back, booted into Debian on the eMMC, and checked: the microSD card is not recognized.

Hi JGiszczak, we assume you followed the instructions in Mixtile Docs to write the Ubuntu image to the microSD card. Could you double-check this? Because the Ubuntu image is Rockchip-format firmware, you cannot use BalenaEtcher or a similar general-purpose tool to write it to a microSD card; you have to use the Rockchip Create Upgrade Disk Tool.